Mastering Large File Handling: Your Guide to Tackling Big Data in MySQL

Hi Data Lovers

A few months ago, I started working on a SQL project given to us by Scaler. There were around 8 files; the smallest one had a size of 170 KB, and the biggest one had 58 MB.

I knew two methods of importing datasets into MySQL Workbench.

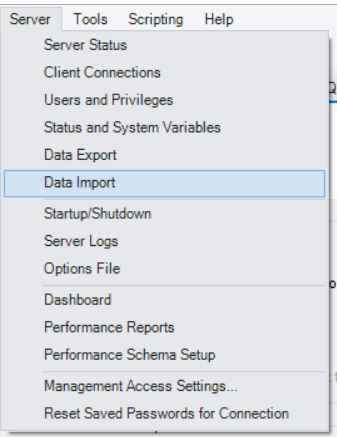

1) Importing .sql extension file from server tab

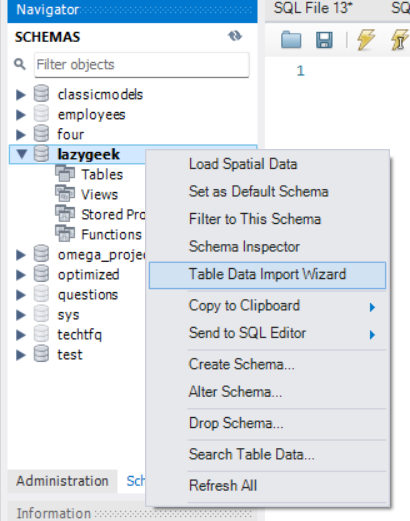

2) Using Table Data Import Wizard method

Since the dataset given to us was in CSV format, I decided to use the second method, which is used for importing data from CSV and JSON file formats.

I started with the smallest one, and it got imported into Workbench in just a few minutes. That’s a good thing, right? But when I moved on to larger files, I started to lose my patience. For a file that was around 16 MB, it took more than an hour to upload. Okay, stay patient, I told myself. After that file finally finished, I started uploading the one that was around 58 MB in size. I waited for an hour, then a second hour, then a third hour, a fourth hour, and finally a fifth hour.

1

2

“He that can have patience can have what he will.”

― Benjamin Franklin

Sorry Franklin sir, It’s not working for me

Now, I have realized that this is not the right way to proceed. I talked to a few of my peers, and they were also facing the same issue. One friend of mine told me that he has been trying to upload the dataset since yesterday.

However, he is facing issues like errors, and the number of rows is significantly less than the original files after finishing the upload. I don’t know about other things, but his patience is truly commendable. I Salute him

Then I started exploring the documentation for MySQL and Stack Overflow to find an easy solution to this headache. After spending more than two hours reading and experimenting, I found a solution. With that method, it took around an hour to upload all eight files without any reduction in data size. That’s pretty awesome, right?

Image by StartupStockPhotos from Pixabay

Image by StartupStockPhotos from Pixabay

Exactly seven months later, I faced the same issue, but this time the file size was not in megabytes but in gigabytes. However, this time I knew the drill. Uploading the dataset was a cakewalk now. Below are the new steps that this weird situation taught me

3) Loading Data From Command Prompt/Terminal

You need to create the structure of the table using the Create statement which I’m assuming you know, If you don’t refer to this mysql doc.

After Creating the table and before uploading the dataset, we need to do some tweaks, which I have illustrated using the following figures.

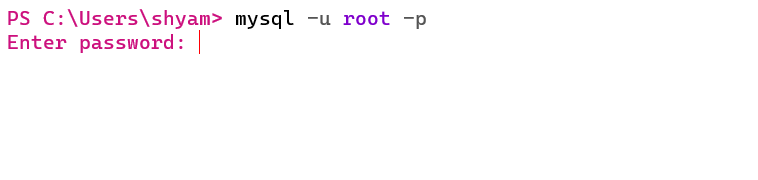

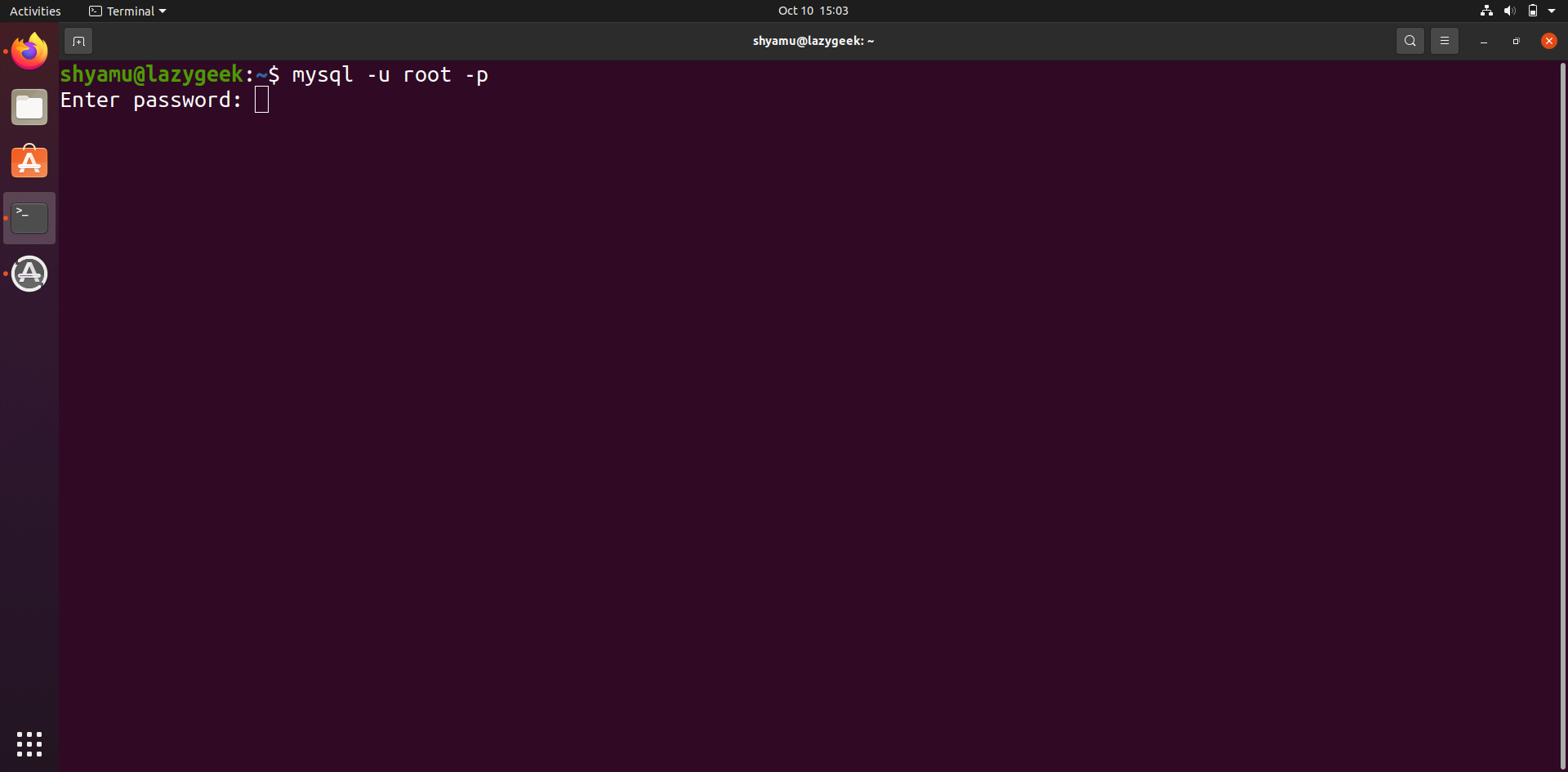

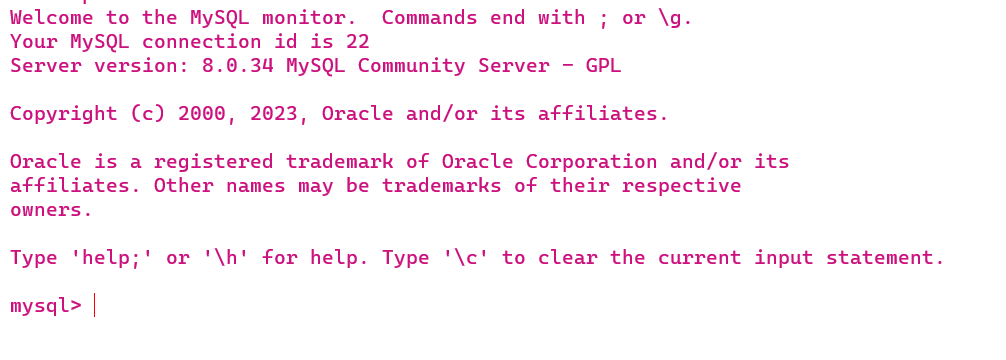

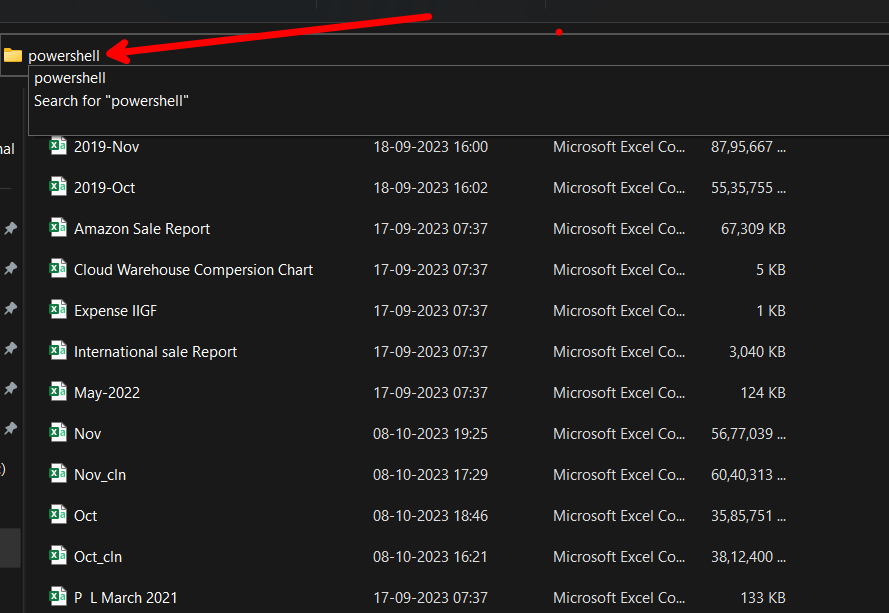

Open Your Command Prompts/Powershell, For Linux/Unix/Mac user do the same by opening your terminals

1

mysql -u root -p

After pressing enter, it will ask your mysql server password for login

Fill your password and press enter, for linux and unix users, they will see nothing while entering their password, it’s unix/linux way of putting extra security.

You’ll see the below window after successful login

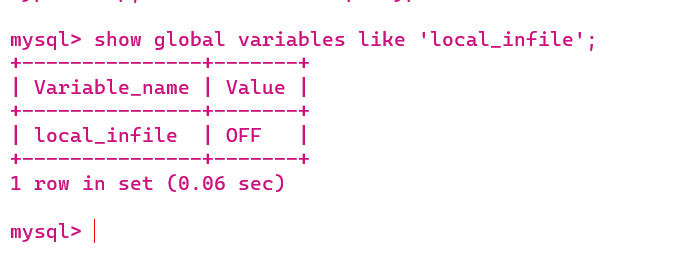

Now enter the below command to check the value of variable_name

1

show global variables like 'local_infile';

To set this from OFF to ON, enter the following command

1

set global local_infile=true;

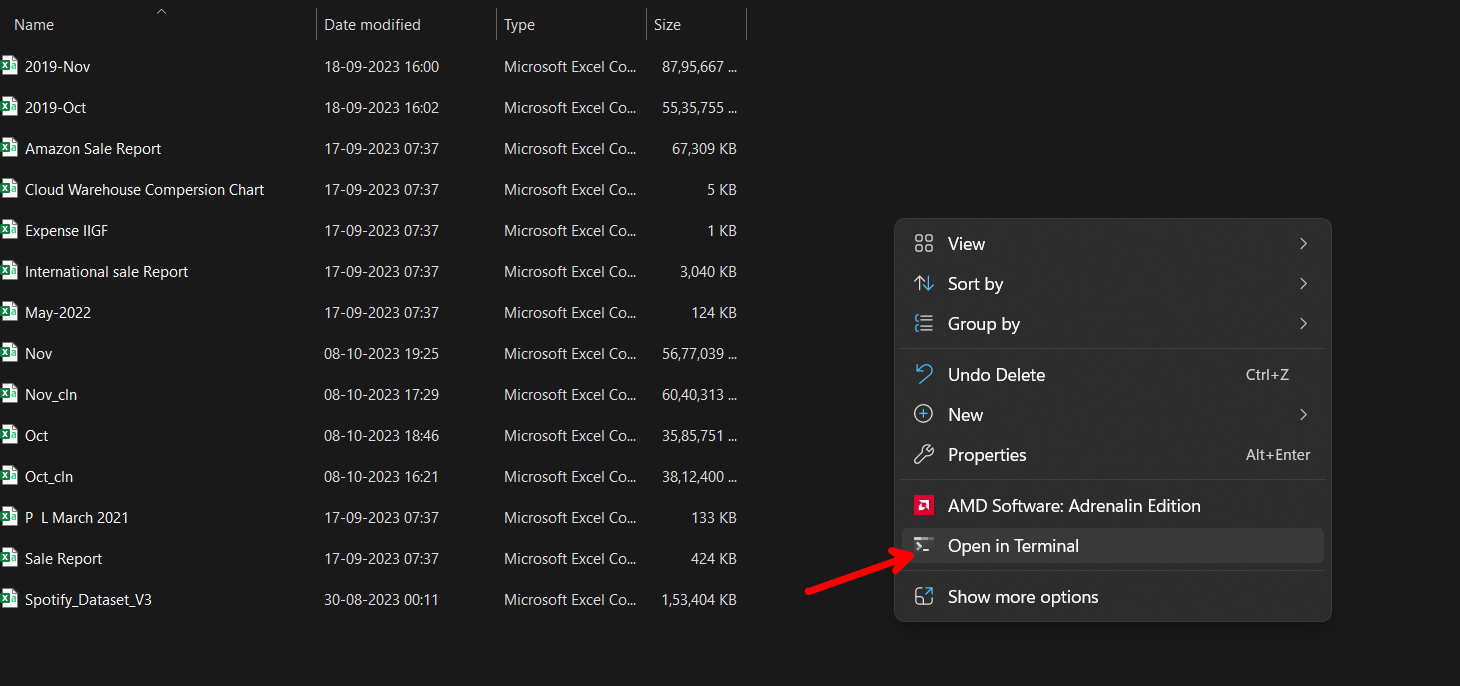

Well Done, we have finished major part of the job. Now go to the folder where your dataset is present using command prompt/Powershell or terminal. If it’s tough for you, go to the folder where your dataset is present and open your powershell/terminal from there. Now, there are two methods of doing this,

1) Right click on the empty space area and select Open in Terminal

2) Go to your path, and enter powershell

Now it’s time to upload the dataset, enter the below command to login

1

mysql --local-infile=1 -u root -p

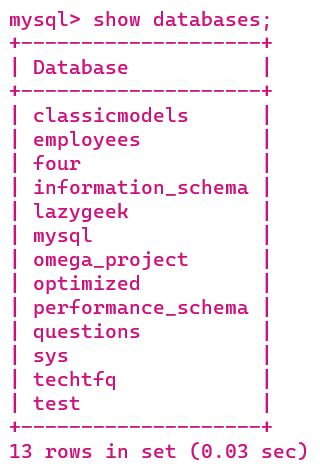

To see all the datasets available in your database, use the following command

1

show databases;

To select your desired database, use the following command

1

use yourdatabase

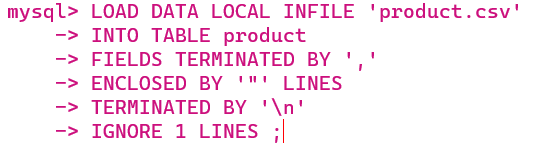

After selecting the database, the following command will start uploading the dataset

1

2

3

4

5

6

LOAD DATA LOCAL INFILE 'filename'

INTO TABLE tablename

FIELDS TERMINATED BY ','

ENCLOSED BY '"' LINES

TERMINATED BY '\n'

IGNORE 1 LINES ;

Change filename in the first line to the name of your original file with extension, also change the table name in the second line to the table name which you have created earlier.

Illustrated Example:

The LOAD DATA reads text from a file using a very high speed, it is 20 times more faster than insert statement

If you want to save time go with this method

While there is a potential security risk associated with using load data local at a higher level, but this aspect is currently not our primary goal. If need arises, we will delve into this topic in our upcoming articles. Nevertheless, it is a excellent method for uploading datasets, whether they are in CSV, TSV, or any other delimited format, as it performs exceptionally well.

That’s all from this side

Refereces

1) mysql doc